Integrating AI into Government Agencies

Overview

MetroStar joined the Joint AI Test Infrastructure Capability (JATIC) program as a partner. JATIC is a pivotal initiative that aims to bridge the gap between the potential of artificial intelligence (AI) and its practical application within the Department of Defense (DoD). The program enhances the safety, efficiency, and effectiveness of military operations by providing a platform for testing AI technologies across the DoD, the Chief Digital and Artificial Intelligence Office (CDAO), and the AI Assurance Directorate.

Our engagement in JATIC builds on our early investment in AI through our Innovation Lab, which put us at the forefront of integrating AI into defense systems. Our proprietary data experimentation platform, Onyx, supports this initiative. Onyx is built entirely on free and open-source technologies, so users retain full data rights while gaining flexibility and cost-effectiveness.

Background

MetroStar’s efforts were aligned with the DoD’s strategic imperative to incorporate AI technologies into operational domains and were rooted in the strategic documents and annual goals published by the DoD.

The significance of this alignment was underscored by the DoD’s $14.7 billion investment in AI R&D, which reflects a commitment to future-proofing defense capabilities. The work also had a direct mission impact: it helped ensure that AI-enabled systems (AIES) underwent rigorous testing, evaluation, and validation before deployment.

By partnering with the Test and Evaluation (T&E) community, JATIC facilitated the development of best practices for the T&E of AI, mitigating mission risks from AI system errors or unintended use. This collaboration moved the DoD closer to harnessing the significant potential of AI technologies while ensuring operational reliability and trustworthiness.

The DoD’s mission depends on using critical technologies such as AI to meet evolving mission needs and stay ahead of near-peer adversaries. The emphasis on “trusted AI” by the Under Secretary of Defense for Research and Engineering highlights the importance of reliable AI-enabled systems. By strengthening the testing, evaluation, and trustworthiness of these systems, JATIC’s work directly supports the DoD’s mission and enables the reliable deployment of AI technologies across critical defense applications.

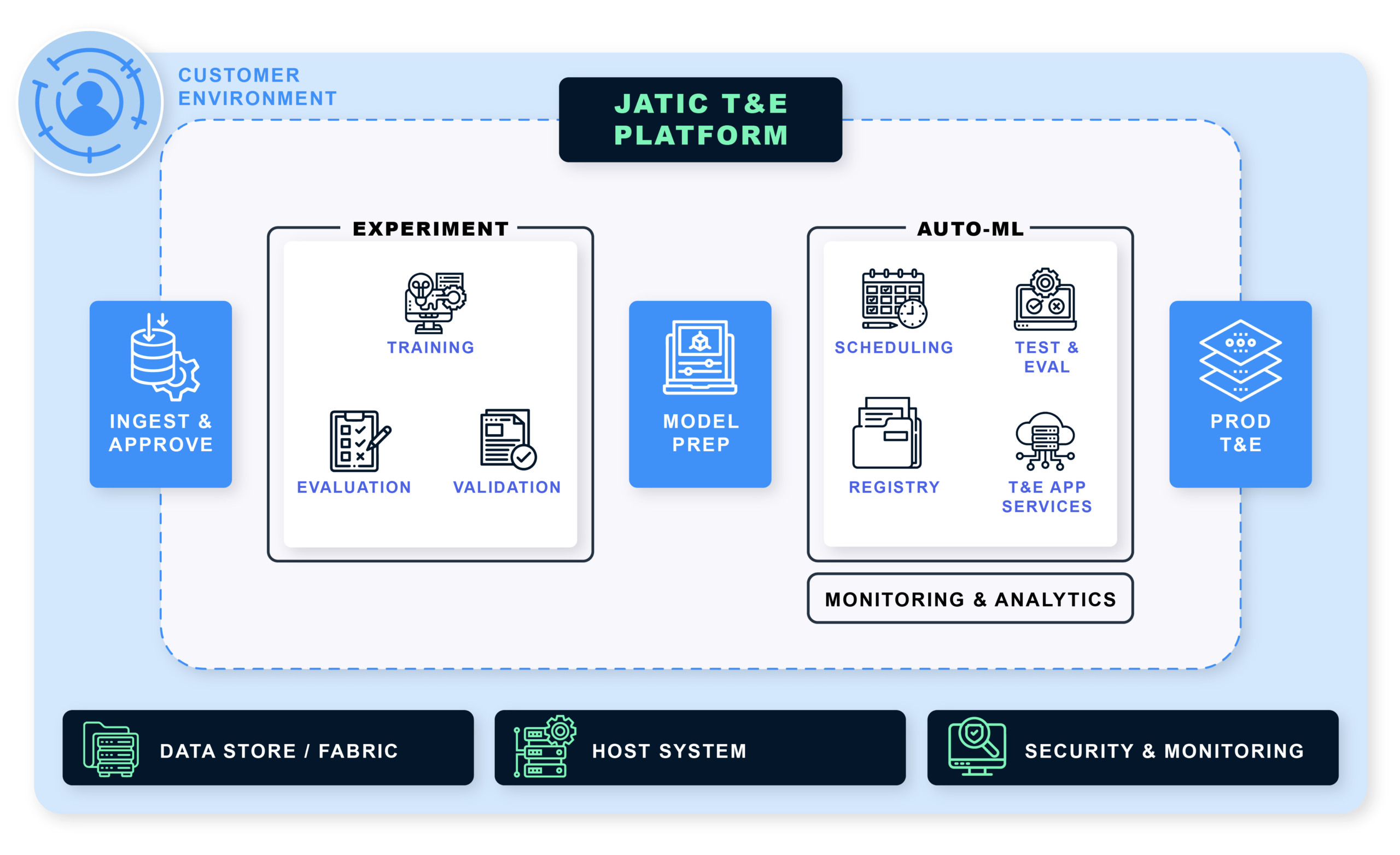

JATIC Product Focus Areas

Target Roles to Support within the ML Lifecycle

Key Objectives

Deliverables & Value Proposition

Measures of Success and How We Are Enabling AI in Government

DEVELOP

Develop the T&E Platform

- Data Hub: Database / Object Store / Data Versioning

- Model Hub: AI/ML Model Registry

- Support T&E Tools

- Workflow Orchestration Engine / Workflow & Experiment Tracking

- IDE / Interactive Python Notebooks; Python Environment Management
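To make the Data Hub and Model Hub items above more concrete, here is a minimal sketch assuming a simple in-memory design. It is not the Onyx or JATIC implementation: the `DataHub` and `ModelHub` classes, their methods, and the dataset and model names are hypothetical. Each dataset snapshot is versioned by its SHA-256 content hash, and each registered model records the data version it was trained on, which is the kind of lineage that helps make an evaluation reproducible.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class DataHub:
    """Hypothetical in-memory Data Hub that versions datasets by content hash."""

    objects: dict = field(default_factory=dict)   # SHA-256 digest -> raw bytes
    versions: dict = field(default_factory=dict)  # dataset name -> ordered digests

    def put(self, name: str, payload: bytes) -> str:
        """Store a dataset snapshot and return its content digest."""
        digest = hashlib.sha256(payload).hexdigest()
        self.objects[digest] = payload
        history = self.versions.setdefault(name, [])
        # Skip creating a version if the content is unchanged from the latest one.
        if not history or history[-1] != digest:
            history.append(digest)
        return digest

    def get(self, name: str, version: int = -1) -> bytes:
        """Return a snapshot by list index: -1 is the latest, 0 the first."""
        return self.objects[self.versions[name][version]]


@dataclass
class ModelHub:
    """Hypothetical Model Hub: a registry of model versions and their data lineage."""

    entries: dict = field(default_factory=dict)  # model name -> list of metadata dicts

    def register(self, name: str, artifact_uri: str, trained_on: str) -> int:
        """Record a new model version and return its 1-based version number."""
        versions = self.entries.setdefault(name, [])
        versions.append({"artifact_uri": artifact_uri, "trained_on": trained_on})
        return len(versions)

    def latest(self, name: str) -> dict:
        """Return metadata for the most recently registered version."""
        return self.entries[name][-1]


# Example usage with illustrative (hypothetical) dataset and model names.
data = DataHub()
v1 = data.put("eval-images", b"batch-1")
v2 = data.put("eval-images", b"batch-1")          # unchanged content: same digest
v3 = data.put("eval-images", b"batch-1+batch-2")  # new content: new version
models = ModelHub()
ver = models.register("detector", "models/detector.pt", trained_on=v3)
print(len(data.versions["eval-images"]), ver)
```

Content addressing means a version identifier can never silently point at different bytes. A production Data Hub would persist objects to a database or object store rather than a Python dictionary.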

SIMPLIFY

Simplify the Deployment of the Platform

- Automatic Infrastructure Provisioning

- Single-Interface Environment Management

- Support for Local Machine, On-Premises, and Cloud Environments

- Multi-GPU & Resource Management
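The “Multi-GPU & Resource Management” item can be illustrated with a first-fit-decreasing placement of evaluation jobs onto GPUs by free memory. This is a hypothetical sketch: the `Gpu` class, the `schedule` function, the job names, and the memory figures are illustrative assumptions, and a real platform would query device memory at run time and likely delegate placement to its orchestration layer.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """Hypothetical view of one GPU: its index and currently free memory in GB."""

    index: int
    free_gb: float
    jobs: list = field(default_factory=list)


def schedule(jobs: dict, gpus: list) -> dict:
    """Assign each job (name -> required GB) to the first GPU with enough room.

    Jobs are placed largest first (first-fit decreasing), which tends to reduce
    fragmentation. A job that fits on no GPU is marked "pending" rather than
    oversubscribing a device.
    """
    placement = {}
    for name, need in sorted(jobs.items(), key=lambda kv: kv[1], reverse=True):
        for gpu in gpus:
            if gpu.free_gb >= need:
                gpu.free_gb -= need
                gpu.jobs.append(name)
                placement[name] = gpu.index
                break
        else:  # no GPU had room for this job
            placement[name] = "pending"
    return placement


# Illustrative cluster and workload (hypothetical values).
gpus = [Gpu(0, 24.0), Gpu(1, 16.0)]
jobs = {"robustness-sweep": 20.0, "bias-audit": 6.0, "drift-check": 10.0, "large-eval": 30.0}
placement = schedule(jobs, gpus)
print(placement)
```

Here the 30 GB job exceeds every device and stays pending, while the remaining jobs are packed onto the two GPUs without exceeding their free memory.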

ENABLE

Enable T&E Engineers to Use the Platform and Libraries with Ease

- Configure and Run Tests

- Visualization / Dashboards

- IDE / Interactive Python Notebooks

- Report Generation
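The “Configure and Run Tests” and “Report Generation” items can be sketched as a small pipeline under stated assumptions: the configuration format (metric names mapped to minimum thresholds), the two metrics, the toy labels and predictions, and every function name below are hypothetical and do not reflect the actual JATIC libraries.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    """Outcome of one configured test: a metric value and its pass threshold."""

    name: str
    value: float
    threshold: float

    @property
    def passed(self) -> bool:
        return self.value >= self.threshold


def accuracy(labels, preds):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(y == p for y, p in zip(labels, preds)) / len(labels)


def recall(labels, preds, positive=1):
    """Fraction of actual positives that the model detected."""
    hits = [p == positive for y, p in zip(labels, preds) if y == positive]
    return sum(hits) / len(hits) if hits else 0.0


METRICS = {"accuracy": accuracy, "recall": recall}  # hypothetical metric registry


def run_tests(config, labels, preds):
    """Evaluate each metric named in the config against its minimum threshold."""
    return [
        CheckResult(name, METRICS[name](labels, preds), threshold)
        for name, threshold in config["min_thresholds"].items()
    ]


def render_report(model_name, results):
    """Produce a plain-text summary with per-test and overall PASS/FAIL status."""
    lines = [f"T&E report for {model_name}"]
    for r in results:
        status = "PASS" if r.passed else "FAIL"
        lines.append(f"  {r.name:<10} {r.value:.2f} (min {r.threshold:.2f}) {status}")
    overall = "PASS" if all(r.passed for r in results) else "FAIL"
    lines.append(f"Overall: {overall}")
    return "\n".join(lines)


# Illustrative configuration and data; not real evaluation results.
config = {"min_thresholds": {"accuracy": 0.75, "recall": 0.90}}
labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
preds = [1, 0, 1, 0, 0, 1, 0, 1, 1, 1]
results = run_tests(config, labels, preds)
report = render_report("detector v1", results)
print(report)
```

With this toy data, accuracy is 8/10 = 0.80 and clears its threshold, while recall is 5/6 ≈ 0.83 and falls short of 0.90, so the overall status is FAIL. Keeping thresholds in configuration rather than in code lets a T&E engineer tighten or relax acceptance criteria without touching the test logic.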

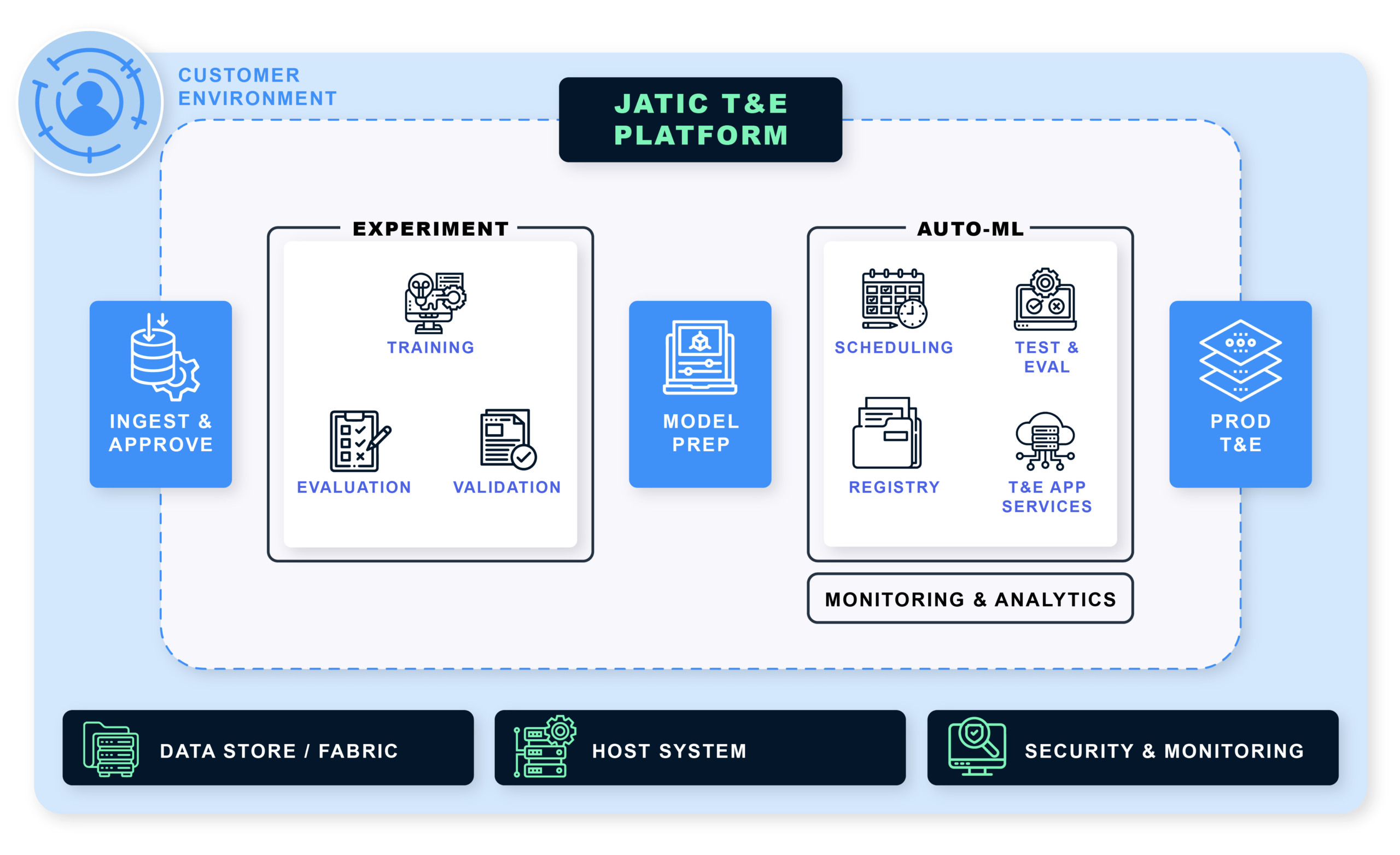
